Deep Language Identification

Introduction

The DeepLanguage project was part of my second-year computer science studies at ENSC. It was a solo project, and we were free to choose both the programming language and the topic. The objective was to develop an artificial intelligence capable of identifying spoken languages from short audio clips. Language identification is becoming increasingly important with the rise of voice assistants like Siri and Alexa, and with the need to transcribe speech and translate it into different languages.

The project’s goal was to design a system that could analyze spoken language and identify whether the audio was in French, English, German, or Spanish. The project presented several challenges, including processing large datasets, developing an effective machine learning model, and creating a user-friendly web application to test the algorithm.

Concept and Approach

The DeepLanguage system is based on speech processing and machine learning principles. The challenge was to teach an artificial neural network how to distinguish between different languages using digital audio data.

I started by gathering a large dataset of short audio clips from Mozilla’s Common Voice dataset, which provided thousands of recordings in the four target languages. These clips were processed, labeled by language, and balanced so that each language was evenly represented.
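The post does not say how the even distribution was achieved. One simple option, shown here as a minimal sketch, is to undersample every language down to the size of the smallest one. The `(path, language)` pair format and the `balance_by_language` helper are hypothetical, not taken from the project's code.

```python
import random
from collections import defaultdict


def balance_by_language(clips, seed=0):
    """Undersample so that every language keeps the same number of clips.

    `clips` is a list of (path, language) pairs (a hypothetical format).
    Assumes at least one clip is given.
    """
    by_lang = defaultdict(list)
    for path, lang in clips:
        by_lang[lang].append((path, lang))

    # The least represented language decides how many clips each language keeps.
    n_per_lang = min(len(items) for items in by_lang.values())

    rng = random.Random(seed)
    balanced = []
    for lang in sorted(by_lang):
        # Randomly keep n_per_lang clips of this language.
        balanced.extend(rng.sample(by_lang[lang], n_per_lang))
    rng.shuffle(balanced)
    return balanced
```

Undersampling throws data away; an alternative is to keep everything and weight the loss by class frequency, which the sketch does not cover.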

Once the dataset was prepared, I moved on to feature extraction, focusing on MFCCs (Mel-frequency cepstral coefficients), which are widely used in speech recognition. These coefficients gave me a compact representation of the sound that is relatively robust to variations in volume and pitch.
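To make the MFCC pipeline concrete, here is a from-scratch NumPy sketch of the standard steps: pre-emphasis, framing, windowing, power spectrum, mel filterbank, log, and DCT. The parameter values (16 kHz audio, 25 ms frames with a 10 ms hop, 26 mel filters, 13 coefficients) are common defaults assumed for illustration. The project's actual settings are not stated, and in practice a library call such as `librosa.feature.mfcc` does the same job.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sr):
    """Triangular filters whose centers are evenly spaced on the mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fbank = np.zeros((n_filters, n_fft // 2 + 1))
    for i in range(1, n_filters + 1):
        left, center, right = bins[i - 1], bins[i], bins[i + 1]
        for k in range(left, center):  # rising edge of the triangle
            fbank[i - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):  # falling edge of the triangle
            fbank[i - 1, k] = (right - k) / max(right - center, 1)
    return fbank


def dct_matrix(n_out, n_in):
    """Orthonormal DCT-II basis; row k gives cepstral coefficient k."""
    n = np.arange(n_in)
    k = np.arange(n_out)[:, None]
    basis = np.cos(np.pi * k * (2 * n + 1) / (2 * n_in))
    basis[0] *= np.sqrt(1.0 / n_in)
    basis[1:] *= np.sqrt(2.0 / n_in)
    return basis


def mfcc(signal, sr=16000, frame_len=400, hop=160, n_fft=512, n_filters=26, n_mfcc=13):
    """Return an (n_frames, n_mfcc) MFCC matrix; assumes len(signal) >= frame_len."""
    signal = np.asarray(signal, dtype=float)
    # 1. Pre-emphasis boosts high frequencies.
    emphasized = np.append(signal[0], signal[1:] - 0.97 * signal[:-1])
    # 2. Overlapping frames (25 ms window, 10 ms hop at 16 kHz), Hamming-windowed.
    n_frames = 1 + (len(emphasized) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = emphasized[idx] * np.hamming(frame_len)
    # 3. Power spectrum of each frame.
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    # 4. Mel filterbank energies, then log (small floor avoids log(0)).
    log_mel = np.log(power @ mel_filterbank(n_filters, n_fft, sr).T + 1e-10)
    # 5. DCT decorrelates the log energies; keep the first n_mfcc coefficients.
    return log_mel @ dct_matrix(n_mfcc, n_filters).T
```

The volume robustness follows from the last two steps. Multiplying the signal by a gain g adds the same constant 2 log g to every log-mel energy, and the DCT of a constant vector is non-zero only in the first coefficient. Dropping c0, or normalizing each coefficient over the clip, therefore removes a constant volume change from the features, up to the small 1e-10 floor inside the log.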

Technical Solution

The next step was to design and train a neural network model. After evaluating different architectures, I chose a Convolutional Neural Network (CNN) to analyze the MFCC features. CNNs are well suited to extracting local patterns from grid-structured data such as the two-dimensional time-frequency matrix of MFCCs, and they helped me detect the subtle differences between languages.
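The post does not describe the network's layers, so the following is only a hypothetical illustration of how a CNN consumes an MFCC matrix. It uses one convolutional layer with ReLU, global average pooling, and a dense softmax layer over the four languages. It is written as an untrained NumPy forward pass so that it runs without a deep-learning framework. The filter count, kernel size, and random weights are assumptions, and training would normally be done in a framework such as Keras or PyTorch.

```python
import numpy as np

LANGUAGES = ["French", "English", "German", "Spanish"]


def conv2d(x, kernels, bias):
    """Valid 2-D convolution (cross-correlation, as in deep-learning libraries).

    x: (H, W) MFCC matrix (frames x coefficients); kernels: (C, kh, kw); bias: (C,).
    Returns C feature maps of shape (C, H - kh + 1, W - kw + 1).
    """
    _, kh, kw = kernels.shape
    # Every kh x kw patch of the input: shape (H-kh+1, W-kw+1, kh, kw).
    patches = np.lib.stride_tricks.sliding_window_view(x, (kh, kw))
    # Dot each patch with each kernel, then add the per-channel bias.
    return np.einsum("ijab,cab->cij", patches, kernels) + bias[:, None, None]


def softmax(z):
    e = np.exp(z - z.max())  # subtract max for numerical stability
    return e / e.sum()


def init_params(n_filters=8, k=3, n_classes=len(LANGUAGES), seed=0):
    """Random, untrained weights (hypothetical sizes)."""
    rng = np.random.default_rng(seed)
    return {
        "kernels": rng.normal(0.0, 0.1, (n_filters, k, k)),
        "conv_bias": np.zeros(n_filters),
        "dense_w": rng.normal(0.0, 0.1, (n_classes, n_filters)),
        "dense_b": np.zeros(n_classes),
    }


def predict_language(features, params):
    """Return one probability per language for an MFCC matrix."""
    # Convolution + ReLU: each filter responds to a local time-frequency pattern.
    maps = np.maximum(conv2d(features, params["kernels"], params["conv_bias"]), 0.0)
    # Global average pooling: one value per filter, whatever the clip length.
    pooled = maps.mean(axis=(1, 2))
    # Dense layer + softmax over the four languages.
    return softmax(params["dense_w"] @ pooled + params["dense_b"])


probs = predict_language(np.random.default_rng(1).standard_normal((98, 13)), init_params())
print(LANGUAGES[int(np.argmax(probs))])
```

Global average pooling is one design choice that lets clips of different lengths produce a fixed-size vector. With random weights the printed prediction is meaningless until the parameters are trained.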

I trained the model on 75% of the dataset and used the remaining 25% for validation and testing. The model performed well, achieving high accuracy, especially for English and German. However, it sometimes struggled to distinguish Spanish from French.

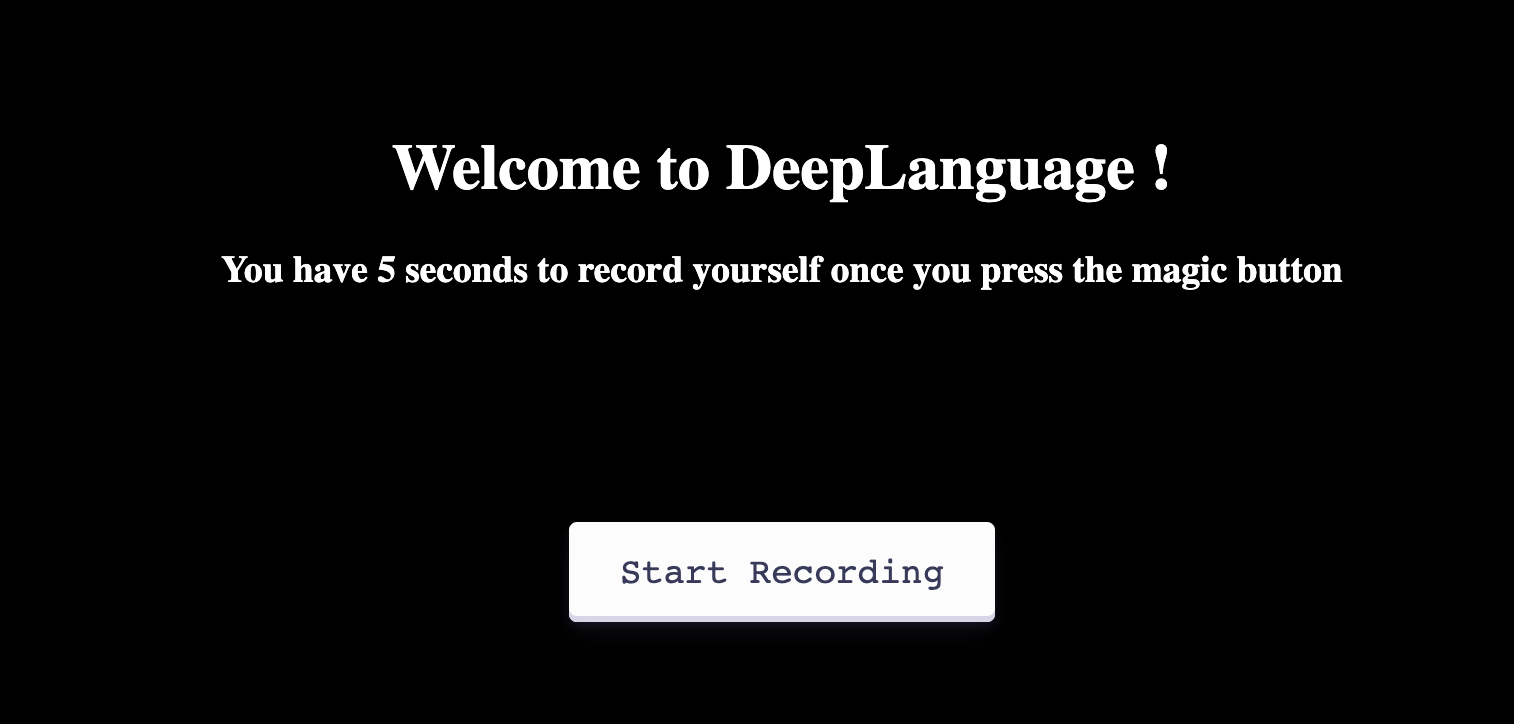
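The 75/25 split and the Spanish/French confusion can both be expressed in a few lines. The sketch below assumes a stratified split, where each language keeps the same 75/25 ratio. The post does not specify this. The sketch also computes per-language accuracy and counts misclassified pairs. The function names are hypothetical.

```python
import random
from collections import Counter, defaultdict


def stratified_split(samples, train_frac=0.75, seed=0):
    """Split (features, language) pairs so each language keeps the same ratio."""
    by_lang = defaultdict(list)
    for sample in samples:
        by_lang[sample[1]].append(sample)
    rng = random.Random(seed)
    train, held_out = [], []
    for lang in sorted(by_lang):
        items = by_lang[lang][:]
        rng.shuffle(items)
        cut = int(train_frac * len(items))  # first 75% of this language for training
        train += items[:cut]
        held_out += items[cut:]
    return train, held_out


def per_language_accuracy(true_labels, predicted_labels):
    """Fraction of each language's clips that were classified correctly."""
    total = Counter(true_labels)
    correct = Counter(t for t, p in zip(true_labels, predicted_labels) if t == p)
    return {lang: correct[lang] / total[lang] for lang in total}


def confusions(true_labels, predicted_labels):
    """Count (true, predicted) pairs for misclassified clips."""
    return Counter((t, p) for t, p in zip(true_labels, predicted_labels) if t != p)
```

A pair such as `('Spanish', 'French')` that appears frequently in the output of `confusions` is exactly the kind of error pattern that per-language accuracy alone can hide.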

On the web development side, I created a web interface using Flask, a Python-based web framework. This allowed users to record a short audio clip and receive an immediate prediction of the language spoken.

Main page of the DeepLanguage web application.
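Before the model can run, the server has to turn the uploaded recording into samples. The post used Pydub for this. The sketch below shows one dependency-free alternative using Python's standard `wave` module, assuming the browser uploads 16-bit PCM WAV (the upload format is an assumption). In a Flask view, the bytes could come from something like `request.files["audio"].read()`, where the `"audio"` field name is hypothetical.

```python
import io
import struct
import wave


def decode_wav_bytes(data):
    """Decode uploaded 16-bit PCM WAV bytes into mono floats in [-1, 1).

    Returns (samples, sample_rate). Only 16-bit samples are accepted (an assumption
    about the client's upload format); anything else raises ValueError.
    """
    with wave.open(io.BytesIO(data), "rb") as wav:
        if wav.getsampwidth() != 2:
            raise ValueError("expected 16-bit PCM audio")
        n_channels = wav.getnchannels()
        sample_rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())
    # WAV stores little-endian signed 16-bit integers, interleaved by channel.
    ints = struct.unpack(f"<{len(raw) // 2}h", raw)
    # Average the channels of each frame to get mono, then scale to [-1, 1).
    mono = [
        sum(ints[i:i + n_channels]) / n_channels / 32768.0
        for i in range(0, len(ints), n_channels)
    ]
    return mono, sample_rate
```

Decoding on the server this way needs no audio device. The trade-off is that the client must send an uncompressed format, which is larger than compressed formats such as MP3.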


Challenges and Improvements

Throughout the development of DeepLanguage, several challenges emerged:

Model Sensitivity: The model was sensitive to variations in the audio recordings, particularly when dealing with non-native speakers or background noise. I experimented with different methods to reduce overfitting, such as regularization and data augmentation.

Web Deployment: One of the key challenges was deploying the audio processing system online. Since the audio processing relied on Python libraries like Pydub (which required an audio card), I encountered difficulties with server-side deployment. To address this, I moved part of the audio processing to the client side using JavaScript.
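The post mentions data augmentation without detailing it. One common approach to improving robustness to background noise is to mix noise into clean clips at a chosen signal-to-noise ratio. The NumPy sketch below is an assumed example of that technique, not the project's actual augmentation. The noise recording it takes as input is hypothetical.

```python
import numpy as np


def add_noise_at_snr(clean, noise, snr_db, rng=None):
    """Mix `noise` into `clean` so that the result has the requested SNR in dB."""
    rng = np.random.default_rng() if rng is None else rng
    clean = np.asarray(clean, dtype=float)
    noise = np.asarray(noise, dtype=float)
    # Repeat short noise recordings until they cover the whole clip.
    if len(noise) < len(clean):
        noise = np.tile(noise, int(np.ceil(len(clean) / len(noise))))
    # Take a random noise segment of the same length as the clip.
    start = rng.integers(0, len(noise) - len(clean) + 1)
    segment = noise[start:start + len(clean)]
    # Scale the noise so that 10 * log10(P_clean / P_noise) equals snr_db.
    p_clean = np.mean(clean ** 2)
    p_noise = np.mean(segment ** 2)
    scale = np.sqrt(p_clean / (p_noise * 10 ** (snr_db / 10)))
    return clean + scale * segment
```

To expose the model to a range of noise levels, you could draw `snr_db` at random for each training clip, for example between 5 and 20 dB.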

Future Improvements

There are several areas where DeepLanguage can be improved:

Dataset Expansion: Increasing the diversity of the dataset by adding more languages and training the model on a wider range of accents and speech patterns.

Model Optimization: Further optimizing the CNN architecture or experimenting with Recurrent Neural Networks (RNNs) to improve accuracy, especially in more difficult language pairs.

Better Web Integration: Deploying the model on a scalable platform and improving the integration of audio recording and prediction into the web interface.

Conclusion

The DeepLanguage project provided valuable experience in applying machine learning techniques to speech recognition problems. It highlighted the importance of data quality and model architecture in AI development. Through this project, I gained a deeper understanding of AI, speech processing, and web development, and I plan to continue exploring this field.

The project also paved the way for future work, such as expanding language recognition capabilities and improving the deployment of AI models in web applications.

You can find the code and further details of the project on GitHub. You can also download the French report here.